On the perils of AI-first debugging -- or, why Stack Overflow still matters in 2025

"My AI hype/terror level is directly proportional to my ratio of reading news about it to actually trying to get things done with it."

This post may not age well, as AI-assisted coding is progressing at an absurd rate. But I think that this is an important thing to remember right now: current LLMs can not only hallucinate, but can also misweight the evidence available to them, and make mistakes when debugging that human developers would not. If you don't allow for this, you can waste quite a lot of time!

Getting MathML to render properly in Chrome, Chromium and Brave

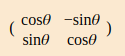

The other day I posted about adding mathematical typesetting to this blog using markdown2, LaTeX and MathML. One problem that remained at the end of that was that it looked a bit rubbish; in particular, the brackets surrounding matrices were just one line high, albeit centred, like this:

...rather than stretched to the height of the matrix, like this example from KaTeX:

After posting that, I discovered that the problem only existed in Chromium-based browsers. I saw it in Chromium, Chrome and Brave on Android and Linux, but in Firefox on Linux, and on Safari on an iPhone, it rendered perfectly well.

Guided by the answers to this inexplicably-quiet Stack Overflow question, I discovered that the problem is the math fonts available on Chromium-based browsers. Mathematical notation, understandably, needs specialised fonts. Firefox and Safari either have these pre-installed, or do something clever to adapt the fonts you are using (I suspect the former, but Firefox developer tools told me that it was using my default body text font for <math> elements). Chromium-based browsers do not, so you need to provide one in your CSS.

Using Frédéric Wang's MathML font test page, I decided I wanted to use the STIX font. It was a bit tricky to find a downloadable OTF file (you specifically need the "math" variant of the font -- in the same way as you might find -italic and -bold files to download, you can find -math ones) but I eventually found a link on this MDN page.

I put the .otf file in my font assets directory, then added the appropriate stuff to my CSS -- a font face definition:

@font-face {
    font-family: 'STIX-Two-Math';
    src: url('/fonts/STIXTwoMath-Regular.otf') format('opentype');
}

...and a clause saying it should be used for <math> tags:

math {
    font-family: STIX-Two-Math;
    font-size: larger;
}

The larger font size is because by default it was rendering about one third of the height of my body text -- not completely happy about that, as it feels like an ad-hoc hack, but it will do for now.

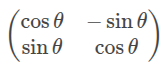

Anyway, mathematical stuff now renders pretty well! Here's the matrix from above, using my new styling:

I hope that's useful for anyone else hitting the same problem.

[Update: because RSS readers don't load the CSS, the bad rendering still shows up in NewsBlur's Android app, which I imagine must be using Chrome under the hood for its rendering. Other RSS readers are probably the same :-(]

Adding mathematical typesetting to the blog

I've spent a little time over the weekend adding the ability to post stuff in mathematical notation on this blog. For example:

It should render OK in any browser released after early 2023; I suspect that many RSS readers won't be able to handle it right now, but that will hopefully change over time. [Update: my own favourite, NewsBlur, handles it perfectly!]

Here's why I wanted to do that, and how I did it.

Blog design update

I was recently reading some discussions on Twitter (I've managed to lose the links, sadly) where people were debating why sites have dark mode. One story that I liked went like this:

Back in the late 80s and 90s, computer monitors were CRTs. These were pretty bright, so people would avoid white backgrounds. For example, consider the light-blue-on-dark-blue colour scheme of the Commodore 64. The only exception I can remember is the classic Mac, which was black on a white background -- and I think I remember having to turn the brightness of our family SE-30 down to make it less glaring.

When the Web came along in the early 90s, non-white backgrounds were still the norm -- check out the screenshot of the original Mosaic browser on this page.

But then, starting around 2000 or so, we all started switching to flat-panel displays. These had huge advantages -- no longer did your monitor have to be deeper and use up more desk space just to have a larger viewable size. And they used less power and were more portable. They had one problem, though -- they were a bit dim compared to CRTs. But that was fine; designers adapted, and black-on-white became common, because it worked, wasn't too bright, and mirrored the ink-on-paper aesthetic that made sense as more and more people came online.

Since then, it's all changed. Modern LCDs and OLEDs are super-bright again. But, or so the story goes, design hasn't updated yet. Instead, people are used to black on white -- and those that find it rather like having a light being shone straight in their face ask for dark mode to make it all better again.

As I said, this is just a story that someone told on Twitter -- but the sequence of events matches what I remember in terms of tech and design. And it certainly made me think that my own site's black-on-white colour scheme was indeed pretty glaring.

So all of this is a rather meandering introduction to the fact that I've changed the design here. The black-on-parchment colour scheme for the content is actually a bit of a throwback to the first website I wrote back in 1994 (running on httpd on my PC in my college bedroom). In fact, probably the rest of the design echoes that too, but it's all in modern HTML with responsive CSS, with the few JavaScript bits ported from raw JS to htmx.

Feedback welcome! In particular, I'd love to hear about accessibility issues or stuff that's just plain broken on particular systems -- I've checked on my phone, in various widths on Chrome (with and without the developer console "mobile emulation" mode enabled) and on Sara's iPhone, but I would not be surprised if there are some configurations where it just doesn't work.

Writing an LLM from scratch, part 7 -- wrapping up non-trainable self-attention

This is the seventh post in my series of notes on Sebastian Raschka's book "Build a Large Language Model (from Scratch)". Each time I read part of it, I'm posting about what I found interesting or needed to think hard about, as a way to help get things straight in my own head -- and perhaps to help anyone else that is working through it too.

This post is a quick one, covering just section 3.3.2, "Computing attention weights for all input tokens". I'm covering it in a post on its own because it gets things in place for what feels like the hardest part to grasp at an intuitive level -- how we actually design a system that can learn how to generate attention weights, which is the subject of the next section, 3.4. My linear algebra is super-rusty, and while going through this one, I needed to relearn some stuff that I think I must have forgotten sometime late last century...

Writing an LLM from scratch, part 6b -- a correction

This is a correction to the sixth in my series of notes on Sebastian Raschka's book "Build a Large Language Model (from Scratch)".

I realised while writing the next part that I'd made a mistake -- while trying to get an intuitive understanding of attention mechanisms, I'd forgotten an important point from the end of my third post. When we convert our tokens into embeddings, we generate two for each one:

- A token embedding that represents the meaning of the token in isolation

- A position embedding that represents where it is in the input sequence.

These two are added element-wise to get an input embedding, which is what is fed into the attention mechanism. However, in my last post I'd forgotten completely about the position embedding and had been talking entirely in terms of token embeddings.
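As a minimal sketch of that element-wise addition, here is a toy version in plain Python. Everything in it is made up for illustration: the three-dimensional vectors, the values, and the names `token_embeddings`, `position_embeddings` and `input_embeddings` are assumptions rather than anything from the book, where the embeddings are learned and far larger.

```python
# Hypothetical token embeddings: one vector per token, independent of position.
token_embeddings = {
    "the": [0.9, 0.1, 0.0],
    "cat": [0.2, 0.8, 0.3],
}

# Hypothetical position embeddings: one vector per slot in the input sequence.
position_embeddings = [
    [0.01, 0.02, 0.03],
    [0.04, 0.05, 0.06],
    [0.07, 0.08, 0.09],
]


def input_embeddings(tokens):
    """Add each token's embedding to the embedding for its position, element-wise."""
    return [
        [t + p for t, p in zip(token_embeddings[token], position_embeddings[i])]
        for i, token in enumerate(tokens)
    ]


# The same token at positions 0 and 2 ends up with different input embeddings.
embedded = input_embeddings(["the", "cat", "the"])
```

The point to notice is that `embedded[0]` and `embedded[2]` both start from the same token embedding for "the", but differ once the position embeddings have been added.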

Surprisingly, though, this doesn't actually change very much in that post -- so I've made a few updates there to reflect the change. The most important difference, at least to my mind, is that the fake non-trainable attention mechanism used -- the dot product of the input embeddings -- is, while still excessively basic, not quite as bad as it was. My old example was that in

the fat cat sat on the mat

...the token embeddings for the two "the"s would be the same, so they'd have super-high attention scores for each other. When we consider that it would be the dot product of the input embeddings instead, they'd no longer be identical because they would have different position embeddings. However, the underlying point holds that they would be too closely attending to each other.
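To make that concrete, here is a rough sketch in plain Python, using a shortened three-token sequence ("the cat the") rather than the full sentence. The vectors are invented toy values, the helper names are hypothetical, and the softmax normalisation is my assumption about how the scores get turned into weights; treat it as an illustration, not a faithful copy of the book's code.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    """Element-wise sum of two equal-length vectors."""
    return [x + y for x, y in zip(a, b)]


def softmax(scores):
    """Normalise scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy embeddings -- real ones are learned and much larger.
the_token = [1.0, 0.0, 0.5]
cat_token = [0.1, 0.9, 0.2]
positions = [[0.0, 0.1, 0.0], [0.1, 0.0, 0.0], [0.0, 0.0, 0.1]]

# Input embeddings (token + position) for the sequence "the cat the".
first_the = add(the_token, positions[0])
cat = add(cat_token, positions[1])
second_the = add(the_token, positions[2])
inputs = [first_the, cat, second_the]

# Attention scores for the first "the" against every token, itself included.
scores = [dot(first_the, other) for other in inputs]
weights = softmax(scores)
```

With these numbers the two "the"s no longer have identical input embeddings, yet the first "the" still scores the second one far higher than it scores "cat", which is the underlying point.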

Anyway, if you're reading along, I don't think you need to go back and re-read it (unless you particularly want to!). I'm just posting this here for the record :-)

Michael Foord: RIP

Michael Foord, a colleague and friend, passed away this weekend. His passing leaves a huge gap in the Python community.

I first heard from him in early 2006. Some friends and I had just started a new company and there were two of us on the team, both experienced software developers. We'd just hired our third dev, another career coder, but as an XP shop that paired on all production code, we needed a fourth. We posted on the Python.org jobs list to see who we could find, and we got a bunch of applications, among them one from the cryptically-named Fuzzyman, a sales manager at a building supplies merchant who was planning a career change to programming.

He'd been coding as a hobby (I think because a game he enjoyed supported Python scripting), and while he was a bit of an unusual candidate, he wowed us when he came in. But even then, we almost didn't hire him -- there was another person who was also really good, and a bit more conventional, so initially we made an offer to them. To our great fortune, the other person turned the offer down and we asked Michael to join the team. I wrote to my co-founders "it was an extremely close thing and - now that the dust is settling - I think [Michael] may have been the better choice anyway."

That was certainly right! Michael's outgoing and friendly nature changed the company's culture from an inward-facing group of geeks to active members of the UK Python community. He got us sponsoring and attending PyCon UK, and then PyCon US, and (not entirely to our surprise) when we arrived at the conferences, we found that he already appeared to be best friends with everyone. It's entirely possible that he'd never actually met anyone there before -- with Michael, you could never be sure.

Michael's warm-hearted, outgoing personality and his rapidly developing technical skills made him an ever-more visible character in the Python community, and he became almost the company's front man. I'm sure a bunch of people only joined our team later because they'd met him first.

I remember him asking one day whether we would consider open-sourcing the rather rudimentary mocking framework we'd built for our internal unit-testing. I was uncertain, and suggested that perhaps he would be better off using it for inspiration while writing his own, better one. He certainly managed to do that.

Sadly things didn't work out with that business, and Michael decided to go his own way in 2009, but we stayed in touch. One of the great things about him was that when you met him after multiple months, or even years, you could pick up again just where you left off. At conferences, if you found yourself without anyone you knew, you could just follow the sound of his booming laugh to know where the fun crowd were hanging out. We kept in touch over Facebook, and I always looked forward to the latest loony posts from Michael Foord, or Michael Fnord, the name he posted under during his fairly-frequent bans...

This weekend's news came as a terrible shock, and I really feel that we've lost a little bit of the soul of the Python community. Rest in peace, Michael -- the world is a sadder and less wonderfully crazy place without you.

[Update: I was reading through some old emails and spotted that he was telling me I should start blogging in late 2006. So this very blog's existence is probably a direct result of Michael's advice. Please don't hold it against his memory ;-)]

[Update: there's a wonderful thread on discuss.python.org where people are posting their memories. I highly recommend reading it, and posting to it if you knew Michael.]

Writing an LLM from scratch, part 6 -- starting to code self-attention

This is the sixth in my series of notes on Sebastian Raschka's book "Build a Large Language Model (from Scratch)". Each time I read part of it, I'm posting about what I found interesting as a way to help get things straight in my own head -- and perhaps to help anyone else that is working through it too. This post covers just one subsection of the trickiest chapter in the book -- subsection 3.3.1, "A simple self-attention mechanism without trainable weights". I feel that there's enough in there to make up a post on its own. It certainly gave me one key intuition that I think is a critical part of how everything fits together.

As always, there may be errors in my understanding below -- I've cross-checked and run the whole post through Claude, ChatGPT o1, and DeepSeek r1, so I'm reasonably confident, but caveat lector :-) With all that said, let's go!

Do reasoning LLMs need their own Philosophical Language?

A few days ago, I saw a cluster of tweets about OpenAI's o1 randomly switching to Chinese while reasoning -- here's a good example. I think I've seen it switch languages a few times as well. Thinking about it, Chinese -- or any other language written in a non-Latin alphabet -- would be particularly noticeable, because those notes describing what it's thinking about flash by pretty quickly, and you're only really likely to notice something weird if it's immediately visibly different to what you expect. So perhaps it's spending a lot of its time switching from language to language depending on what it's thinking about, and then it translates back to the language of the conversation for the final output.

Why would it do that? Presumably certain topics are covered better in its training set in specific languages -- it will have more on Chinese history in Chinese, Russian history in Russian, and so on. But equally possibly, it finds certain topics easier to reason about in some languages. Tiezhen Wang, a bilingual AI developer, tweeted that he preferred doing maths in Chinese "because each digit is just one syllable, which makes calculations crisp and efficient". Perhaps there's something similar there for LLMs.

That got me thinking about the 17th-century idea of a Philosophical Language. If you've read Neal Stephenson's Baroque Cycle books, you'll maybe remember it from there -- that's certainly where I heard about it. The idea was that natural human languages were not very good for reasoning about things, and the solution would be to create an ideal, consciously-designed language that was more rational. Then philosophers (or scientists as we'd say these days) could work in it and get better results.

There are echoes of that in E' (E-Prime), another one I picked up on from fiction (this time from The Illuminatus! Trilogy). It's English, without the verb "to be", the idea being that most uses of the word are unnecessarily foggy and would be better replaced. "Mary is a doctor" implies that her job is the important thing about her, whereas "Mary practices medicine" is specific that it's just one aspect of her. What I like about it is that it -- in theory -- gets a more "Philosophical" language with a really small tweak rather than a complete redesign.

What I'm wondering is, are human languages really the right way for LLMs to be reasoning if we want accurate results quickly? We all know how easy it is to be bamboozled by words, either our own or other people's. Is there some way we could construct a language that would be better?

The baroque philosophers ultimately failed, and modern scientists tend to switch to mathematics when they need to be precise ("physics is a system for translating the Universe into maths so that you can reason about it" -- discuss).

But perhaps by watching which languages o1 is choosing for different kinds of reasoning we could identify pre-existing (grammatical/morphological/etc) structures that just seem to work better for different kinds of tasks, and then use that as a framework to build something on top of. That feels like something that could be done much more easily now than it could in the pre-LLM world.

Or maybe a reasoning language is something that could be learned as part of a training process; perhaps each LLM could develop its own, after pre-training with human languages to get it to understand the underlying concept of "language". Then it might better mirror how LLMs work -- its structures might map more directly to the way transformers process information. It might have ways of representing things that you literally could not describe in human languages.

Think of it as a machine code for LLMs, perhaps. Is it a dumb idea? As always, comments are open :-)

Writing an LLM from scratch, part 5 -- more on self-attention

I'm reading Sebastian Raschka's book "Build a Large Language Model (from Scratch)", and posting about what I found interesting every day that I read some of it. In retrospect, it was kind of adorable that I thought I could get it all done over my Christmas break, given that I managed just the first two-and-a-half chapters! However, now that the start-of-year stuff is out of the way at work, hopefully I can continue. And at least the two-week break since my last post in this series has given things some time to stew.

In the last post I was reading about attention mechanisms and how they work, and was a little thrown by the move from attention to self-attention. In this blog post I hope to get that all fully sorted so that I can move on to the rest of chapter 3, and then the rest of the book. Raschka himself said on X that this chapter "might be the most technical one (like building the engine of a car) but it gets easier from here!" That's reassuring, and hopefully it means that my blog posts will speed up too once I'm done with it.

But first: on to attention and what it means in the LLM sense.